Preface

Congratulations on purchasing Scyld ClusterWare, the most scalable and configurable Linux Cluster Software on the market. This guide describes how to install Scyld ClusterWare using Penguin’s installation repository. Read this document in its entirety and back up the system before installing the software, paying particular attention to keeping copies of any local configuration files.

The Scyld ClusterWare documentation set consists of:

The Installation Guide containing detailed information for installing and configuring your cluster.

The Release Notes containing release-specific details, potentially including information about installing or updating the latest version of Scyld ClusterWare.

The Administrator’s Guide and User’s Guide describing how to configure, use, maintain, and update the cluster.

The Programmer’s Guide and Reference Guide describing the commands, architecture, and programming interface for the system.

These product guides are available in two formats, HTML and PDF. You can browse the documentation on the Penguin Computing Support Portal at https://www.penguincomputing.com/support/documentation.

Once you have completed the Scyld ClusterWare installation, you can find the HTML and PDF documentation in /var/www/html/, or view it in a web browser at http://localhost/clusterware-docs/ and http://localhost/clusterware-docs.pdf. If you are browsing from a computer other than the cluster’s master node, replace localhost with the master node’s hostname. For example, if the hostname is “iceberg”, you may need to use its fully qualified name, such as http://iceberg.penguincomputing.com/clusterware-docs/ and http://iceberg.penguincomputing.com/clusterware-docs.pdf.
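The hostname substitution described above can be sketched in a few lines of Python. This is only an illustration: the helper name docs_urls is hypothetical and not part of Scyld ClusterWare, and the two paths are copied from the URLs given in this preface.

```python
from urllib.parse import urlunsplit


def docs_urls(host="localhost"):
    """Return the (HTML, PDF) documentation URLs for a given master node.

    `host` is the master node's hostname; use "localhost" only when
    browsing from the master node itself.
    """
    html = urlunsplit(("http", host, "/clusterware-docs/", "", ""))
    pdf = urlunsplit(("http", host, "/clusterware-docs.pdf", "", ""))
    return html, pdf


if __name__ == "__main__":
    # Example: browsing from another machine, using the fully qualified name.
    for url in docs_urls("iceberg.penguincomputing.com"):
        print(url)
```

Whether a short hostname or a fully qualified name is needed depends on how name resolution is set up on the machine you are browsing from.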

Note: If your reseller pre-installed Scyld ClusterWare on your cluster, you may skip these installation instructions and visit the User’s Guide and Reference Guide for helpful insights about how to use Scyld ClusterWare.

Scyld ClusterWare System Overview

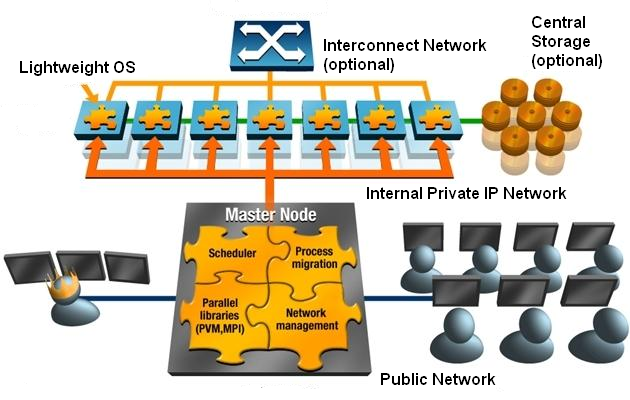

Scyld ClusterWare streamlines the processes of configuring, running, and maintaining a Linux cluster using a group of commodity off-the-shelf (COTS) computers connected through a private network.

The front-end “master node” in the cluster is configured with a full Linux installation and distributes computing tasks to the other “compute nodes” in the cluster. Nodes communicate across a private network and share a common process execution space with common, cluster-wide process ID values.

A compute node is commonly diskless, as its kernel image is downloaded from the master node at node startup time using the Preboot eXecution Environment (PXE), and libraries and executable binaries are transparently transferred from the master node as needed. A compute node may access data files on locally attached storage or across NFS from an NFS server managed by the master node or some other accessible server.

For the master node to communicate with an outside network, it needs two network interface controllers (NICs): one for the private internal cluster network and one for the outside network. Connecting the master node to an outside network is recommended so that multiple users can access the cluster from remote locations.
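Before installing, it can be useful to confirm that the intended master node actually exposes two network interfaces. The following is a rough sketch, not a Scyld ClusterWare tool: the function names are hypothetical, it assumes the Linux loopback device is named "lo", and it counts every other interface the operating system reports, including virtual ones, so treat the result as a hint rather than proof.

```python
import socket


def non_loopback(names):
    """Return the interface names that are not the loopback device."""
    return [name for name in names if name != "lo"]


def has_two_nics(names):
    """True if at least two non-loopback interfaces are present."""
    return len(non_loopback(names)) >= 2


if __name__ == "__main__":
    # socket.if_nameindex() returns (index, name) pairs for each interface.
    names = [name for _, name in socket.if_nameindex()]
    print("interfaces:", non_loopback(names))
    print("two or more NICs:", has_two_nics(names))
```

Which interface serves the private cluster network and which serves the outside network is still a configuration decision made during installation.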

Cluster Layout

Figure 1. Cluster Configuration