Quick Start Installation

Introduction

Scyld ClusterWare is supported on Red Hat Enterprise Linux 7 (RHEL7) and CentOS7. This document describes installing on Red Hat; installing on CentOS is identical except where explicitly noted. Scyld ClusterWare is installed on the master node after installing a RHEL7 or CentOS7 base distribution. You must configure your network interfaces and network security settings to support Scyld ClusterWare.

The compute nodes join the cluster without any explicit installation. Having obtained a boot image via PXE, the nodes are converted to a Scyld-developed network boot system and seamlessly appear as part of a virtual parallel computer.

This chapter introduces you to the Scyld ClusterWare installation procedures, highlights the important steps in the Red Hat installation that require special attention, and then steps you through the installation process.

Installation uses the /usr/bin/yum command to install rpms from a repository, typically across a network connection.

See Detailed Installation Instructions for more information.

Refer to the Red Hat documentation for information on installing RHEL7.

Network Interface Configuration

Tip

To begin, you must know which interface is connected to the public network and which is connected to the private network. Typically, the public interface is eth0 and the private interface is eth1.
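If you are not sure which physical port is eth0 and which is eth1, a running system can list the interfaces it knows about. The sketch below is one way to do this, assuming the standard Linux sysfs tree under /sys/class/net; the function name list_interfaces is hypothetical. It prints each interface's name, link state, and MAC address, which you can then match against your cabling.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print each network interface with its link state and
# MAC address, reading only the standard Linux sysfs tree (/sys/class/net).
list_interfaces() {
    local dev name state mac
    for dev in /sys/class/net/*; do
        name=$(basename "$dev")
        # operstate is e.g. "up", "down", or "unknown" (loopback reports "unknown")
        state=$(cat "$dev/operstate" 2>/dev/null || echo "?")
        mac=$(cat "$dev/address" 2>/dev/null || echo "?")
        printf '%-10s %-8s %s\n' "$name" "$state" "$mac"
    done
}

list_interfaces
```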

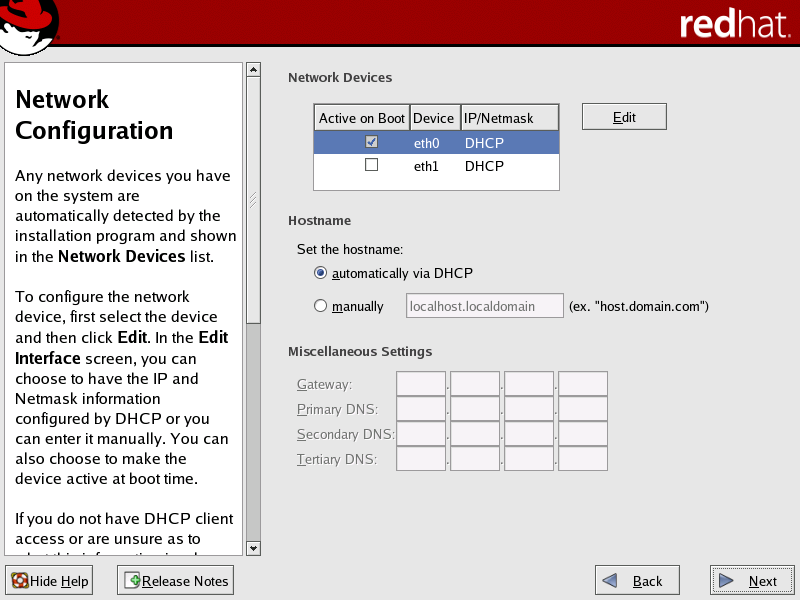

It is important to properly configure the network interfaces to support Scyld ClusterWare. The Network Configuration screen is presented during the RHEL7 installation; it can be accessed post-installation via the Applications -> System Settings -> Network menu options.

Cluster Public Network Interface

For the public network interface (typically eth0), the following settings are typical, but can vary depending on your local needs:

DHCP is the default, and is recommended for the public interface.

If your external network is set up to use static IP addresses, then you must configure the public network interface manually. Select and edit this interface, setting the IP address and netmask provided by your network administrator.

If you use a static IP address, the subnet must be different from that chosen for the private interface. You must set the hostname manually and also provide gateway and primary DNS IP addresses.
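Whether the public and private subnets are "different" comes down to whether their address ranges overlap. A minimal sketch, assuming each interface's address is written in CIDR form (address/prefix length): two IPv4 networks overlap exactly when their addresses agree under the shorter of the two prefixes. The function names ip_to_int and cidrs_overlap are hypothetical, and the addresses in the example are placeholders.

```bash
#!/usr/bin/env bash
# Hypothetical helper: convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
    local a b c d
    IFS=. read -r a b c d <<< "$1"
    echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Hypothetical helper: succeed if two CIDR blocks such as 10.54.0.1/16 overlap.
# They overlap exactly when their addresses agree under the shorter prefix.
cidrs_overlap() {
    local ip1=${1%/*} p1=${1#*/} ip2=${2%/*} p2=${2#*/}
    local p=$(( p1 < p2 ? p1 : p2 ))
    local mask=$(( (0xFFFFFFFF << (32 - p)) & 0xFFFFFFFF ))
    local n1 n2
    n1=$(( $(ip_to_int "$ip1") & mask ))
    n2=$(( $(ip_to_int "$ip2") & mask ))
    [ "$n1" -eq "$n2" ]
}

# Example: a public address from the 203.0.113.0/24 documentation range
# checked against a private 10.54.0.0/16 cluster network
if cidrs_overlap 203.0.113.5/24 10.54.0.1/16; then
    echo "subnets overlap: choose a different private range"
else
    echo "subnets are distinct"
fi
```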

Tip

When configuring the network security settings (see Network Security Settings), Scyld recommends setting a firewall for the public interface.

Figure 1. Public Network Interface Configuration

Cluster Private Network Interface

Caution

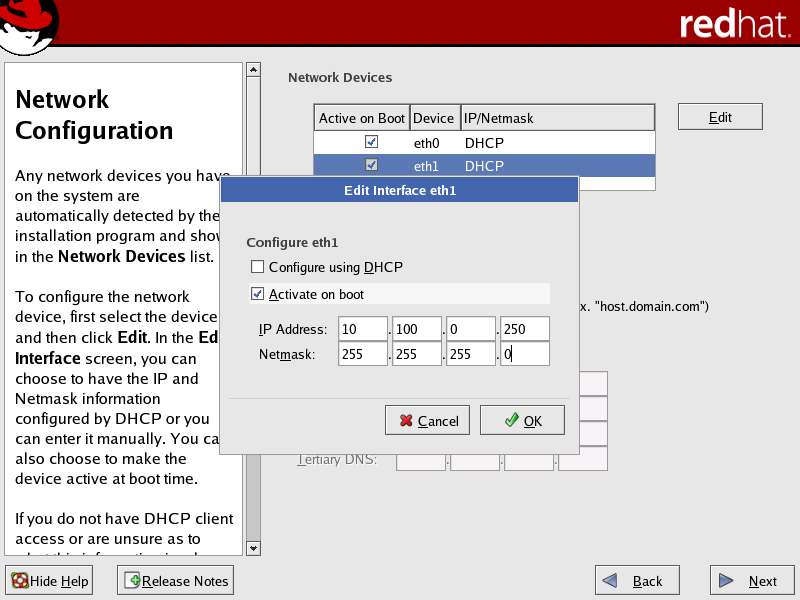

For the private network interface (typically eth1), DHCP is shown as the default, but you cannot use it. You must configure the network interface manually and assign a static IP address and netmask.
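If you configure the private interface after installation rather than in the installer's Edit Interface dialog, RHEL7 and CentOS7 read interface settings from /etc/sysconfig/network-scripts/ifcfg-<device>. The sketch below writes a minimal static configuration with the settings the private interface needs (no DHCP, activate on boot, fixed address and netmask). The helper name write_private_ifcfg and the example address are hypothetical; write to a scratch file first, review it, then copy it into place and restart networking (for example, systemctl restart network).

```bash
#!/usr/bin/env bash
# Hypothetical helper: write a static (non-DHCP) ifcfg file for the private
# cluster interface. On RHEL7/CentOS7 the real file lives at
# /etc/sysconfig/network-scripts/ifcfg-<device>; the caller picks the path.
write_private_ifcfg() {
    local device=$1 ipaddr=$2 netmask=$3 outfile=$4
    cat > "$outfile" <<EOF
DEVICE=$device
TYPE=Ethernet
# Static addressing: DHCP must not be used on the private interface
BOOTPROTO=none
# Bring the interface up at boot ("activate on boot")
ONBOOT=yes
IPADDR=$ipaddr
NETMASK=$netmask
EOF
}

# Example (illustrative values), written to a scratch file for review:
# write_private_ifcfg eth1 10.54.0.1 255.255.0.0 /tmp/ifcfg-eth1
```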

Caution

The cluster will not run correctly unless the private network interface is trusted. You can set this interface as a “trusted device” when configuring the network security settings post-installation; see Trusted Devices.

For the cluster private interface (typically eth1), the following settings are required for correct operation of Scyld ClusterWare:

Do not configure this interface using DHCP. You must select this interface in the Network Configuration screen and edit it manually in the Edit Interface dialog.

Set this interface to “activate on boot” to initialize the specific network device at boot-time.

Specify a static IP address. We recommend using a non-routable address (such as 192.168.x.x, 172.16.x.x to 172.30.x.x, or 10.x.x.x).

If the public subnet is non-routable, then use a different non-routable range for the private subnet (e.g., if the public subnet is 192.168.x.x, then use 172.16.x.x to 172.30.x.x or 10.x.x.x for the private subnet).

Once you have specified the IP address, set the subnet mask based on this address. The subnet mask must accommodate a range large enough to contain all of your compute nodes.
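The address-range and subnet-mask requirements above can be checked with a little arithmetic. This is a sketch under two assumptions: "non-routable" is taken to mean the RFC 1918 private blocks (10.0.0.0/8, 172.16.0.0/12, and 192.168.0.0/16, where the 172 block runs from 172.16.x.x through 172.31.x.x and so includes the 172.16 to 172.30 range suggested above), and the subnet must hold one address for the master node plus one per compute node. All function names are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed if a dotted-quad IPv4 address lies in an
# RFC 1918 private block: 10/8, 172.16/12 (172.16-172.31), or 192.168/16.
is_rfc1918() {
    local a b rest
    IFS=. read -r a b rest <<< "$1"
    (( a == 10 )) && return 0
    (( a == 172 && b >= 16 && b <= 31 )) && return 0
    (( a == 192 && b == 168 )) && return 0
    return 1
}

# Usable host addresses for a prefix length: all addresses in the subnet
# minus the network and broadcast addresses.
usable_hosts() {
    echo $(( (1 << (32 - $1)) - 2 ))
}

# Longest prefix (smallest subnet) that still holds the master node
# (assumed to need one address) plus the given number of compute nodes.
prefix_for_nodes() {
    local needed=$(( $1 + 1 )) p=30
    while (( p > 0 )) && [ "$(usable_hosts "$p")" -lt "$needed" ]; do
        p=$(( p - 1 ))
    done
    echo "$p"
}

# Dotted-quad netmask for a prefix length, e.g. 24 -> 255.255.255.0
prefix_to_netmask() {
    local mask=$(( (0xFFFFFFFF << (32 - $1)) & 0xFFFFFFFF ))
    echo "$(( (mask >> 24) & 255 )).$(( (mask >> 16) & 255 )).$(( (mask >> 8) & 255 )).$(( mask & 255 ))"
}

# Example: plan a 10.x.x.x private network for 100 compute nodes
if is_rfc1918 10.54.0.1; then
    p=$(prefix_for_nodes 100)
    echo "prefix /$p netmask $(prefix_to_netmask "$p")"
fi
```

For 100 compute nodes the example prints prefix /25 netmask 255.255.255.128, since a /25 provides 126 usable addresses. Leave headroom if you expect to add nodes later.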

Figure 2. Private Network Interface Configuration

Tip

You must first select the private interface in the Network Configuration screen, then click Edit to open the Edit Interface dialog box.

Tip

Although you can edit the private interface manually during the Red Hat installation, making this interface a “trusted device” must be done post-installation.

Network Security Settings

Caution

The security features provided with this system do not guarantee a completely secure system.

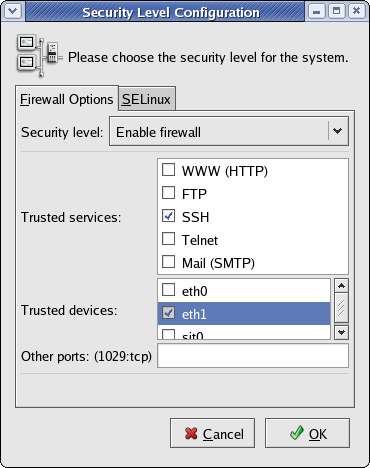

The Firewall Configuration screen presented during the RHEL7 installation applies to the public network interface and should be set according to your local standards.

The RHEL7 installer allows you to select some, but not all, of the security settings needed to support Scyld ClusterWare. The remaining security settings must be made post-installation; see Trusted Devices.

Scyld has the following recommendations for configuring the firewall:

Set a firewall for the public network interface (typically eth0).

If you chose to install a firewall, you must make the private network interface (typically eth1) a “trusted device” to allow all traffic to pass to the internal private cluster network; otherwise, the cluster will not run correctly. This setting must be made post-installation.

The Red Hat installer configures the firewall with most services disabled. If you plan to use SSH to connect to the master node, be sure to select SSH from the list of services in the Firewall Configuration screen to allow SSH traffic to pass through the firewall.

Red Hat RHEL7 or CentOS7 Installation

Scyld ClusterWare depends on the prior installation of certain RHEL7 or CentOS7 packages from the base distribution. Ideally, each Scyld ClusterWare rpm names every dependency, so when you use /usr/bin/yum to install Scyld ClusterWare, yum attempts to install those dependencies automatically, provided the base distribution yum repositories are accessible and the dependencies are found. If a dependency cannot be installed, the Scyld installation fails with an error message that describes which rpm(s) or file(s) are needed.

Caution

Check the Scyld ClusterWare Release Notes for your release to determine whether you must update your Red Hat or CentOS base installation. If you are not familiar with the yum command, see Updating Red Hat or CentOS Installation for details on the update procedures.

Scyld ClusterWare Installation

Scyld ClusterWare is installed using the Penguin Yum repository http://updates.penguincomputing.com/clusterware/. Each Scyld ClusterWare release is continuously tested with the latest patches from Red Hat and CentOS. Before installing or updating your master node, visit the Support Portal to determine whether any patches should be excluded due to incompatibility with ClusterWare; such incompatibilities should be rare. Then update RHEL7 or CentOS7 on your master node (excluding any incompatible packages) before installing or updating Scyld ClusterWare.
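When the Support Portal lists incompatible packages, yum's --exclude option keeps them out of the base update. The sketch below assumes the package names or glob patterns come from the Support Portal; the function name update_base_excluding and the package pattern in the usage note are hypothetical. Setting DRY_RUN=1 prints the yum command instead of running it, so you can review it first.

```bash
#!/usr/bin/env bash
# Hypothetical helper: update the base distribution while excluding the
# package names/globs given as arguments (one --exclude per pattern).
# With DRY_RUN=1 the yum command is printed instead of executed.
update_base_excluding() {
    local pkg
    local -a args=(update)
    for pkg in "$@"; do
        args+=("--exclude=$pkg")
    done
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo yum "${args[@]}"
    else
        yum "${args[@]}"
    fi
}
```

For example, DRY_RUN=1 update_base_excluding 'examplepkg*' prints yum update --exclude=examplepkg*; run it as root without DRY_RUN to perform the update.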

Configure Yum To Support ClusterWare

The Yum repo configuration file for Scyld ClusterWare must be downloaded from the Penguin Computing Support Portal and properly configured:

Log in to the Support Portal at https://www.penguincomputing.com/support.

Click Download your Yum repo file to download the clusterware.repo file, and place it in the /etc/yum.repos.d/ directory.

Set the permissions:

[root@scyld ~]# chmod 644 /etc/yum.repos.d/clusterware.repo
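To confirm that the repo file is in place with the expected permissions, you can check it directly; yum repolist should then list the ClusterWare repository. The helper below is a hypothetical sketch that relies on GNU stat (as shipped with RHEL7 and CentOS7) to read the file's octal mode.

```bash
#!/usr/bin/env bash
# Hypothetical helper: confirm a yum repo file exists, is a regular file,
# and has mode 644 (owner read/write, everyone else read-only).
check_repo_file() {
    local repo=${1:-/etc/yum.repos.d/clusterware.repo}
    local mode
    if [ ! -f "$repo" ]; then
        echo "missing: $repo" >&2
        return 1
    fi
    mode=$(stat -c '%a' "$repo")   # GNU stat: permission bits in octal
    if [ "$mode" != 644 ]; then
        echo "unexpected mode $mode on $repo (expected 644)" >&2
        return 1
    fi
    echo "ok: $repo"
}
```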

With this setup complete, your master node is ready to retrieve Scyld ClusterWare installations and updates.

Install ClusterWare

You can use Yum to install ClusterWare and all updates up to and including the latest ClusterWare release, assuming you have updated your RHEL7 or CentOS7 base distribution as prescribed in the ClusterWare Release Notes.

Verify the version you are running with the following:

[root@scyld ~]# cat /etc/redhat-release

This should return a string similar to “Red Hat Enterprise Linux Server release 7.9” or “CentOS Linux release 7.9.2009 (Core)”.
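If you script this check, for example across several master nodes, a pattern match on the release string is enough. The function name is_el7_release is hypothetical, and the patterns cover only the two string formats quoted above.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed if a release string (as found in
# /etc/redhat-release) names a RHEL 7 or CentOS 7 release.
is_el7_release() {
    case $1 in
        "Red Hat Enterprise Linux"*" release 7."*|"CentOS Linux release 7."*)
            return 0 ;;
        *)
            return 1 ;;
    esac
}

# Example: check the running system
# is_el7_release "$(cat /etc/redhat-release)" && echo "RHEL7/CentOS7 detected"
```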

Install the Scyld ClusterWare script that simplifies installing (and later updating) software, then execute that script:

[root@scyld ~]# yum install install-scyld

[root@scyld ~]# install-scyld

Configure the network for Scyld ClusterWare: edit /etc/beowulf/config to specify the cluster interface, the maximum number of compute nodes, and the beginning IP address of the first compute node. See the remainder of this guide and the Administrator’s Guide for details.

Reboot your system.
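Before editing /etc/beowulf/config, it can help to confirm that the whole compute-node address range fits inside the private subnet. The sketch below does only the address arithmetic, assuming compute nodes receive consecutive addresses starting at the first compute-node address; it does not write the config file, whose directive names and syntax are described in the Administrator’s Guide. All function names and example addresses are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for planning the compute-node address range.
ip_to_int() {
    local a b c d
    IFS=. read -r a b c d <<< "$1"
    echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

int_to_ip() {
    local n=$1
    echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}

# Address of the last compute node, assuming consecutive addresses
# starting at the first compute node's address.
last_node_ip() {
    local first=$1 count=$2
    int_to_ip $(( $(ip_to_int "$first") + count - 1 ))
}

# Succeed if two addresses fall in the same network for a prefix length.
in_same_subnet() {
    local mask=$(( (0xFFFFFFFF << (32 - $3)) & 0xFFFFFFFF ))
    local a b
    a=$(( $(ip_to_int "$1") & mask ))
    b=$(( $(ip_to_int "$2") & mask ))
    [ "$a" -eq "$b" ]
}

# Example: 32 compute nodes starting at 10.54.0.100, master at 10.54.0.1/16
last=$(last_node_ip 10.54.0.100 32)
if in_same_subnet 10.54.0.1 "$last" 16; then
    echo "last node $last is inside the private subnet"
else
    echo "last node $last falls outside the private subnet"
fi
```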

To verify that ClusterWare was installed successfully, do the following:

[root@scyld ~]# uname -r

The result should match the specific ClusterWare kernel version noted in the Release Notes.
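When checking several systems, this comparison can be scripted. The hypothetical helper below takes the kernel version from the Release Notes as its argument and compares it with uname -r; it does not know the expected version itself.

```bash
#!/usr/bin/env bash
# Hypothetical helper: compare the running kernel release with the
# ClusterWare kernel version from the Release Notes (first argument).
check_kernel() {
    local expected=$1 running
    running=$(uname -r)
    if [ "$running" = "$expected" ]; then
        echo "ok: running $running"
    else
        echo "mismatch: running $running, expected $expected" >&2
        return 1
    fi
}
```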

Trusted Devices

If you chose to install a firewall, you must make the private network interface (typically eth1) a “trusted device” to enable all traffic on this interface to pass through the firewall; otherwise, the cluster will not run properly. This must be done post-installation.

After you have installed Red Hat and Scyld ClusterWare, reboot the system and log in as “root”.

Access the security settings through the Red Hat Applications -> System Settings -> Security Level menu options.

In the Security Level Configuration dialog box, make sure the private interface is checked in the “trusted devices” list, then click OK.

Tip

If you plan to use SSH to connect to the master node, be sure that SSH is checked in the “trusted services” list.
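RHEL7 and CentOS7 use firewalld by default, so the same settings can also be made from the command line with firewall-cmd. A sketch, assuming firewalld is running, the private interface is eth1, and SSH should be allowed in the public zone; the helper names run and trust_private_interface are hypothetical, and you should confirm the options against firewall-cmd(8) on your release. With DRY_RUN=1 the commands are printed rather than executed.

```bash
#!/usr/bin/env bash
# Sketch (assumes firewalld): trust the private interface and allow SSH
# in the public zone. Hypothetical helpers; DRY_RUN=1 only prints commands.
PRIVATE_IF=${PRIVATE_IF:-eth1}

run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

trust_private_interface() {
    # Bind the private interface to the "trusted" zone, which accepts all traffic
    run firewall-cmd --permanent --zone=trusted --change-interface="$PRIVATE_IF"
    # Allow SSH through the firewall in the public zone
    run firewall-cmd --permanent --zone=public --add-service=ssh
    # Load the permanent configuration into the running firewall
    run firewall-cmd --reload
}
```

Run trust_private_interface as root without DRY_RUN to apply the changes; firewall-cmd --get-active-zones should then show the private interface under the trusted zone.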

Figure 3. Security Settings Post-Installation

You are now ready to boot and configure the compute nodes, as described in the next section.

Compute Nodes

In a Scyld cluster, the master node controls booting, provisioning, and operation of the compute nodes. You do not need to explicitly install Scyld ClusterWare on the compute nodes.

Scyld requires that you configure your compute nodes to boot via PXE and use the auto-activate node option, so that each node automatically joins the cluster as it powers on. Nodes do not need to be added manually.

If you are not already logged in as root, log into the master node using the root username and password.

Use the command bpstat -U in a terminal window on the master node to view a continuously updated table of node status information.

Set the BIOS on each compute node to boot via PXE. Using the auto-activate option with PXE booting allows each node to automatically boot and join the cluster as it powers on.

Node numbers are initially assigned in order of connection with the master node. Boot the compute nodes by powering them up in the order you want them to be numbered, typically one-by-one from the top of a rack downwards (or from the bottom up). You can reorder nodes later as desired; see the Administrator’s Guide.

The nodes transition through the boot phases. As the nodes join the cluster and are ready for use, they are shown as “Up” by the bpstat -U command.

The cluster is now fully operational with diskless compute nodes. See Cluster Verification Procedures for more about bpstat and node states.